I have been asked to set up a Logic App in Azure (think Power Automate, for anyone outside Azure) to copy specific file(s) from a SharePoint folder into an Azure Data Lake.

The first example file is around 16,000 rows and is not likely to grow significantly. The same is true of the other files.

There is a specific use case behind this first Logic App:

- The data in the CSV file(s) is updated every day, and the file name stays the same

- We need to copy the file and overwrite the copy in the data lake every day, after the task that updates the SharePoint file has run (around 5 PM)

- We want the Logic App to be run from Data Factory

- Once the Logic App has run, we want to trigger the pipeline that populates the SQL database from the file in the data lake.

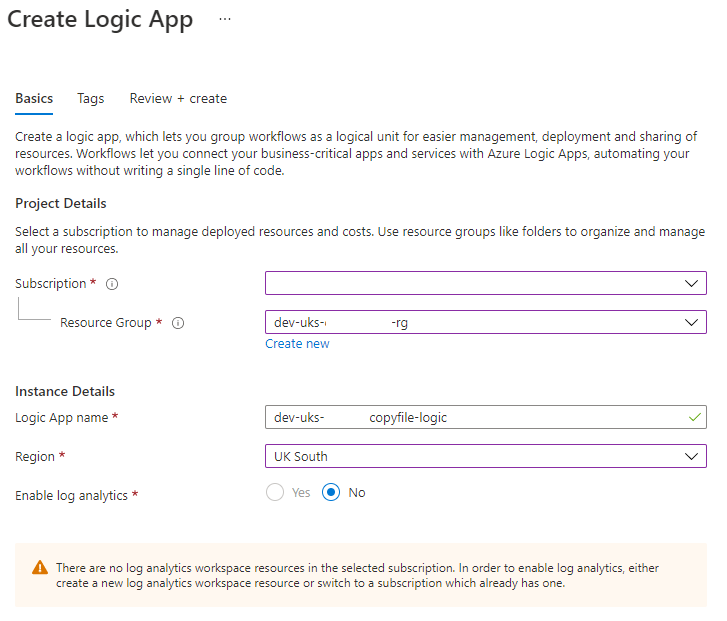

Set up the Logic App

In Azure, go to Logic Apps and click New.

Log Analytics: enable this to get richer debugging information about your Logic Apps during runtime.

Consumption Plan: the easiest way to get started, and fully managed (a pay-as-you-go model). Suited to workflows that grow slowly or are fairly static.

Standard Plan: newer than the Consumption plan. Runs on single-tenant Logic Apps and is billed at a fixed hosting-plan rate rather than per execution, which can give you cost savings.

Create the Logic App

Once you have added tags and created the resource, it’s time to build the Logic App workflow.

Because we want to trigger it from Azure Data Factory, we choose the When a HTTP request is received trigger.

The HTTP POST URL (generated when you save the Logic App) will be used in Data Factory to trigger the Logic App.

I have added a JSON schema for the request body that covers the important information for this project: the Container in the data lake, the folder, the file name and isFolder (which becomes more important a little later).

    {
        "properties": {
            "Container": {
                "type": "string"
            },
            "fileName": {
                "type": "string"
            },
            "folder": {
                "type": "string"
            },
            "isFolder": {
                "type": "boolean"
            }
        },
        "type": "object"
    }
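To make the schema concrete, here is a minimal Python sketch of a request body a caller might send to this trigger, plus a tiny type check against the schema’s `type` keywords. The values (`datalake`, `sharepoint/daily`, `Report.csv`) are hypothetical placeholders, and `matches_schema` is an illustrative helper, not full JSON Schema validation.

```python
import json

# The trigger schema from above, as a Python dict.
TRIGGER_SCHEMA = {
    "type": "object",
    "properties": {
        "Container": {"type": "string"},
        "fileName": {"type": "string"},
        "folder": {"type": "string"},
        "isFolder": {"type": "boolean"},
    },
}

# Map the JSON Schema type names used in this schema to Python types.
_JSON_TYPES = {"string": str, "boolean": bool}


def matches_schema(body: dict, schema: dict = TRIGGER_SCHEMA) -> bool:
    """Return True if every schema property present in body has the declared type.

    Deliberately minimal: extra properties are ignored and nothing is treated
    as required (the schema above declares no 'required' list).
    """
    for name, spec in schema["properties"].items():
        if name in body and not isinstance(body[name], _JSON_TYPES[spec["type"]]):
            return False
    return True


# Hypothetical example body; replace the values with your own container/folder/file.
example_body = {
    "Container": "datalake",
    "fileName": "Report.csv",
    "folder": "sharepoint/daily",
    "isFolder": False,
}

print(json.dumps(example_body))
print(matches_schema(example_body))  # True
```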

List Folder

Now we want to list the SharePoint folder. Create a new step and search for List folder.

The action’s description reads: “Returns files contained in a SharePoint folder.”

Next, sign in to SharePoint with a valid account that has access to the SharePoint site.

This raises a question. For this test my own username and password have been used, but I change my password periodically, which means the connection will need manually updating whenever that happens.

What we need is a way of signing in to SharePoint that isn’t tied to a user and that we can use within the Logic App. This needs further thought and investigation.

When you sign in, a SharePoint API connection is created in your Azure resource group.

To get the site address, go into SharePoint, click the … next to the file and choose Copy link.

The link needed amending slightly, because the site address needs to be in the form tenant.sharepoint.com/sites/ProjectMainArea
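The amendment is mechanical: keep the scheme and host, then everything up to and including the site name after `/sites/`. A minimal sketch, where `site_address` is a hypothetical helper and the link is made up; it assumes the copied link contains a `/sites/<name>` segment.

```python
from urllib.parse import urlsplit


def site_address(copied_link: str) -> str:
    """Reduce a copied SharePoint link to https://<tenant>.sharepoint.com/sites/<site>.

    Hypothetical helper: assumes the link contains a '/sites/<site>' path segment.
    The query string (everything after '?') is dropped.
    """
    parts = urlsplit(copied_link)
    segments = [s for s in parts.path.split("/") if s]
    if "sites" not in segments:
        raise ValueError("no /sites/<name> segment in link")
    i = segments.index("sites")
    if i + 1 >= len(segments):
        raise ValueError("link ends before the site name")
    return f"{parts.scheme}://{parts.netloc}/sites/{segments[i + 1]}"


# Made-up copied link, for illustration only.
link = "https://tenant.sharepoint.com/:x:/r/sites/ProjectMainArea/Shared%20Documents/Data/Report.csv?d=w123"
print(site_address(link))  # https://tenant.sharepoint.com/sites/ProjectMainArea
```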

If you have access, you should then be able to click the folder icon next to File Identifier and select the correct area.

For Each

Next step: for each item in the ‘Body’ from the List folder step, we get the file content. Add a new step and choose the For each control (because there will be multiple files).

Get File Content

Now we want to get the file content from SharePoint with Get file content.

Its description reads: “Gets file contents using the file identifier. The contents can be copied somewhere else or used as an attachment.”

Use the same SharePoint site address as before. Then click File Identifier and choose ID from the Dynamic content pop-up.

Here we can see that the List folder step gives us lots of file metadata we can use, such as DisplayName, ID and LastModified.

We know we need ID for Get file content.

We are now at a point where we can run this as a test.

So far, the workflow consists of the HTTP request trigger, List folder, and a For each loop containing Get file content.

However, we hit two specific errors:

Status 404: File not found

Cannot write more bytes to the buffer than the configured maximum buffer size: 104857600

So we have two issues to resolve, and after a bit of help on the Q&A forums we found out that:

- List folder (“Returns files contained in a SharePoint folder.”) actually also returns folders, and these error in Get file content because they are not files.

- Logic Apps aren’t really set up for large files, and there doesn’t appear to be any way past the size limit. So we need to check our file sizes and think of ways to bring through smaller data sets if need be.
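One hedged option for “bringing through smaller data sets”, if a file ever approached the limit, is to split it into parts before it reaches the Logic App. The sketch below is a hypothetical helper (not part of the Logic App) that splits CSV text into chunks whose UTF-8 size stays under a chosen byte limit, repeating the header row in each chunk. The standard Logic Apps buffer error reports 104857600 bytes (100 MiB) as its maximum; the tiny limit in the usage example only makes the split visible.

```python
def split_csv(text: str, max_bytes: int) -> list[str]:
    """Split CSV text into chunks of at most max_bytes (UTF-8), each starting with the header.

    Assumes one record per line (no newlines embedded inside quoted fields).
    """
    lines = text.splitlines(keepends=True)
    if not lines:
        return []
    header, rows = lines[0], lines[1:]
    header_size = len(header.encode("utf-8"))
    chunks: list[str] = []
    current, size = [header], header_size
    for row in rows:
        row_size = len(row.encode("utf-8"))
        if header_size + row_size > max_bytes:
            raise ValueError("a single row plus the header exceeds max_bytes")
        if size + row_size > max_bytes:
            # Current chunk is full: close it and start a new one with the header.
            chunks.append("".join(current))
            current, size = [header], header_size
        current.append(row)
        size += row_size
    if len(current) > 1 or not chunks:
        chunks.append("".join(current))
    return chunks


csv_text = "id,name\n1,a\n2,b\n3,c\n"
parts = split_csv(csv_text, max_bytes=16)
print(len(parts))  # 2
```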

Thankfully our files are well below the threshold, and the business doesn’t expect them to grow much.

This is where we can start applying criteria, which we want to do anyway because we only want certain files:

- If it’s a folder, we don’t want it

- If it’s 104857600 bytes or more, we don’t want it

- Only bring through files called…….

So we need to change our For each.

Within For each, add a new step and search for Condition.

Add your conditions (using And/Or).

Then move Get file content into the True branch.

So if IsFolder is false and the size is less than 104857600 bytes, we grab File A or File B.
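The For each plus Condition logic amounts to a plain filter over the List folder output. A minimal sketch: the file names in `WANTED` are hypothetical stand-ins for “File A” and “File B”, the listing is made up, and the field names (`IsFolder`, `Size`, `Name`, `Id`) are assumed to match the List folder Body, so check them against your own run. The limit is the figure reported by the standard Logic Apps buffer error (104857600 bytes).

```python
# Limit reported by the standard Logic Apps buffer error (100 MiB).
MAX_BYTES = 104_857_600

# Hypothetical names standing in for "File A" and "File B".
WANTED = {"FileA.csv", "FileB.csv"}


def should_copy(item: dict) -> bool:
    """Mirror of the Condition: not a folder, under the size limit, and a wanted name."""
    return (
        not item.get("IsFolder", False)
        and item.get("Size", 0) < MAX_BYTES
        and item.get("Name") in WANTED
    )


# Made-up List folder output: one folder, one wanted file, one unwanted file.
listing = [
    {"Id": "1", "Name": "Archive", "IsFolder": True, "Size": 0},
    {"Id": "2", "Name": "FileA.csv", "IsFolder": False, "Size": 2_000_000},
    {"Id": "3", "Name": "Notes.docx", "IsFolder": False, "Size": 50_000},
]

# Only these Ids would reach Get file content in the True branch.
to_fetch = [item["Id"] for item in listing if should_copy(item)]
print(to_fetch)  # ['2']
```

Filtering out folders first is what removes the 404s, since only real files reach Get file content.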

When you now test this Logic App, Get file content should succeed, with most items not even meeting the criteria.

Create Blob

Finally, within the True branch, we need to add the file to our data lake.

Search for Create blob.

Here you have to sign in to your Blob Storage, which again creates another API connection in Azure.

You have to supply the storage account name and choose an authentication type. Access Key has been used here, with the key details entered directly. Normally in Data Factory the access key is obtained through a Key Vault, so more information is needed to come up with the most secure way of doing this. There are two other authentication types to choose from.

More investigation is needed into these other approaches.
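Create blob also needs a folder path and a blob name. Assuming the folder path starts with the container name (as in `/container/folder`) and is built from the trigger body’s `Container` and `folder`, the mapping can be sketched as below. `blob_location` is a hypothetical helper; keeping the SharePoint file’s name as the blob name is what makes each daily run overwrite the previous copy.

```python
def blob_location(body: dict, display_name: str) -> tuple[str, str]:
    """Return (folder_path, blob_name) for Create blob from the trigger body.

    Hypothetical helper: assumes folder path = /<Container>/<folder>, and that the
    blob keeps the SharePoint file's name so each daily run overwrites it.
    """
    container = body["Container"].strip("/")
    folder = body.get("folder", "").strip("/")
    folder_path = "/" + "/".join(p for p in (container, folder) if p)
    return folder_path, display_name


body = {"Container": "datalake", "folder": "sharepoint/daily", "fileName": "Report.csv", "isFolder": False}
print(blob_location(body, "Report.csv"))  # ('/datalake/sharepoint/daily', 'Report.csv')
```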

Now we can do a full test of the Logic App.

Testing the Logic App

When you trigger the Logic App, you can open the run and check the inputs and outputs of each step.

The Body of List folder contains a long list of every object. This is really handy for knowing what details are available inside this action.

For this test, the Body was copied into a Word document.

Next comes Get file content.

Most of the files don’t satisfy the condition.

Next was clicked to step through to a file that reached Get file content (the first one appeared as number 32).

And now we can see the Body of Create blob. (This happens for every file that matches the criteria.)

If you use the Microsoft Azure Storage Explorer app, you can check that the files have indeed been updated (either a new file is created or the existing one is updated).

Data Factory

Now that we have saved the Logic App, we want to trigger it from Data Factory.

Create a pipeline and add a Web activity.

Copy the HTTP POST URL from the Logic App trigger and paste it into the URL setting.

For the Body, I simply used the JSON from the start of this article.
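For testing the trigger outside Data Factory, roughly the same call the Web activity makes can be sketched with Python’s standard library, assuming a POST with a JSON `Content-Type` header. The URL below is a placeholder, not a real trigger URL (the real one includes a signature, so treat it like a secret), and the body values are hypothetical.

```python
import json
import urllib.request


def build_trigger_request(url: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) a POST with a JSON body, like the Web activity's call."""
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder for the HTTP POST URL copied from the trigger.
TRIGGER_URL = "https://example.invalid/workflows/placeholder/triggers/manual/paths/invoke"

req = build_trigger_request(
    TRIGGER_URL,
    {"Container": "datalake", "folder": "sharepoint/daily", "fileName": "Report.csv", "isFolder": False},
)
# urllib stores header names capitalized, so the lookup key is "Content-type".
print(req.get_method(), req.get_header("Content-type"))  # POST application/json
# urllib.request.urlopen(req) would actually send it; not called here.
```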

Now you can trigger this pipeline along with all your other pipelines, to run the data into your data lake and then into SQL to be used for analytics.
