Any time there is a big change in technology, there is a steep learning curve to go with it. Since Microsoft announced Fabric in May 2023 We have been working hard on getting up to speed with how Fabric works and how it changes the nature of what we do.

- What new architectures are available for us to work with?

- How it changes working with Power BI?

- How we create our staging and reporting data before loading into Power BI?

- How Pipelines differ from data Factory, and pipelines in Synapse?

- Keeping up with the monthly updates across Fabric

In my previous post “The Microsoft data Journey so far. From SQL Server, Azure to Fabric” I looked at my own journey working with on premises Microsoft services through to Fabric. Along with identifying all the key fabric areas.

This post is about my initial discoveries whilst actively working with Fabric, specifically using the Lake house, and ways of developing your learning within the Microsoft Fabric space.

In Part 1, we explore various topics within the Fabric Data Engineering capabilities. In Part 2, we will delve into Fabric Power BI and semantic modelling resources.

Analytics Engineer

Analytics engineer is a new title to go along with Fabric (SaaS) end to end analytics and data platform. One of the first things to do was to go through Microsoft’s Analytics Engineering learning pathway, with the aim of taking the exam.

I personally took my time with this because I wanted to get as much out of it as possible and passed the exam on the 24th of June 2024. Making me a certified Fabric Analytics Engineer.

I highly recommend going through the learning pathway https://learn.microsoft.com/en-us/credentials/certifications/fabric-analytics-engineer-associate/?practice-assessment-type=certification to get equipped with the skills needed for being an Analytics Engineer,

You learn about Lakehouse, Data Warehouses. Notebooks. Pipelines. Semantic models and Reporting throughout the course.

But the main question is, What is Fabric Analytics Engineering, and how does it differ from Data Engineering and Data Analysis?

Data Engineering

- Designing and building data pipelines.

- Focus on maintaining the infrastructure

- Collecting data from multiple systems and data storage solutions

- Skills in SQL, Python, Devops GIT, ETL tools, Database management etc

Analytics Engineering

- Specialises in analytics solutions. Data engineers have a broader focus.

- Collaborate closely with Data Engineers, business process knowledge owners. Analysts etc

- Transforming data into reusable assets

- Implementing best practices like version control and deployment

- Works with lakehouses, Data Warehouses, Notebooks, Dataflows, Data pipelines, Semantic models and Reports

- Skills in SQL, DAX, Pypark, ETL Tools etc

Analytics Specialist

- Focuses on analysing data and data insights to support decision making

- Creates reports and Dashboards and communicates findings

- Identifies trends and patterns along with anomalies

- Collaborates with stakeholders to understand their needs.

- Skills in visualisation tools like power BI

As you can see, the Analytics Engineer is a bridge between Data Engineering and Analytics. The Analytics Specialist is sometimes seen as an end to end developer with knowledge across all of these specialties. But has a major focus on analytics solutions.

Architecture

With Fabric, we have numerous architectural options. By making strategic choices, we can eliminate the need for data copies across our architecture. Consider the standard architecture using Azure resources. A Data lake, SQL Database and Data Factory.

Here we have 2 copies of the original data. In the data Lake and the SQL Database (Because you copy the data across to transform, create your dimensions, facts etc).

And finally the same imported dims and facts created in SQL DB are imported and stored in Power BI.

This architecture works well, it allows for data scientists to use the data in the data lake and it allows for SQL Stored procedures to be put in place to process the data into the correct analytical (Star) Schemas for Power BI.

However, wouldn’t it be great if you could remove some of the data copies across the resources.

Fabric leverages the medallion architecture

- Bronze layer – Our raw unprocessed data.

- Silver – Cleaned and transformed data

- Gold Layer – Enriched data optimised for analytics.

Even using Fabric, there are lots of opportunities to use specific resources to change your architecture dependent upon the project. For example, you could decide to use Fabrics next generation Data warehouse, designed for high performance and scalability. Excellent for big data solutions. And allows you to do cross database querying, using multiple data sources without data duplication.

However, at this point I have spent my time looking at how we can utilize the delta lake. Creating an architecture that uses Delta Parquet files. Can this be a viable solution for those projects that don’t have a need for the high level ‘big data’ performance of the Fabric Data Warehouse?

There are significant advantages here, as we are reducing the amount of duplicated data we hold.

And of course, Power BI can use Direct Lake connection, rather than Import mode. Allowing you to remove the imported model entirely from Power BI. Even better, with partitioned Delta Parquet files you can have bigger models, only using the files that you need.

This architecture has been used for some initial project work, and the Pyspark code, within Notebooks, has proved itself to be fast and reliable. As a fabric Engineer I would definitely say that if you are a SQL person its vital that you up your skills to include Pyspark.

However, with some provisos, The Data Warehouse can also utilise Direct Lake mode, so sometimes. Its literally the case of, what language do you prefer to work in. Pyspark or SQL?

Task Flows

The Fabric Task flows are a great Fabric feature, and incredibly helpful when setting up a new project.

- You get to visualize your data processes

- Create best practice task flows

- Classify your tasks into Data Ingestion, Data Storage, Data Preparation etc

- Standardise team work and are easy to navigate

Here, the Medallion flow was used, immediately giving us the architecture required to create our resources.

You can either select a previously created task to add to your task flow

Or create a new item. Fabric will recommend objects for the task

One tip from using the medallion task flow. As you can see. Bronze, Silver and Gold Data Lake houses are shown as separate activities. Currently, you can’t create one data lake and add it to each activity.

If you want to use one lake for all three areas, you need to customise the activity flow. As a result, the decision was made to have three delta lake’s working together for the project. But it may not be something you wish to do. So customising the flow may be a better option.

GIT integration

The fabric workspace comes with GIT integration, which offers a lot of benefits. With GIT, you can save your code base to your central repository, allowing for version control. Much better collaboration, better code and peer reviewing. And CI/DC automation.

There are some current issues however, especially with branching, as some branching capabilities are still in preview. For an initial test project a very basic approach was used.

Azure Devops was used for this project

Here, a new Project has been added to Devops: Debbies Training

Visual Studio

Visual Studio was used to clone the repository, but there are lots of other ways you can do this next step, For example GIT Bash.

And connect to the repository that has been created (You need to log in to see your repos)

Click clone and you will then have a local copy of the code base. It doesn’t support everything at the moment but it does support Notebooks, Reports, Semantic Models and Pipelines, which is the focus of our current learning.

Connect Devops to Fabric

Back in the Fabric Workspace go to Workspace Settings

You are now connected to Devops (Note the branch is main)

Later, we want to start using branches when the underlying Fabric logic is better, But for now, we have been using the main branch. Not ideal, but we should see this getting better a little further down the line.

You can now create your resources and be confident that your code is being stored centrally.

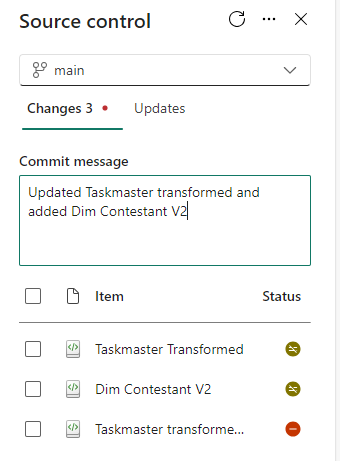

All you need to do is publish changes via the Fabric workspace (Source Control)

Click Commit to commit your changes and change Descriptor

Watch out for updates to this functionality. Especially branching

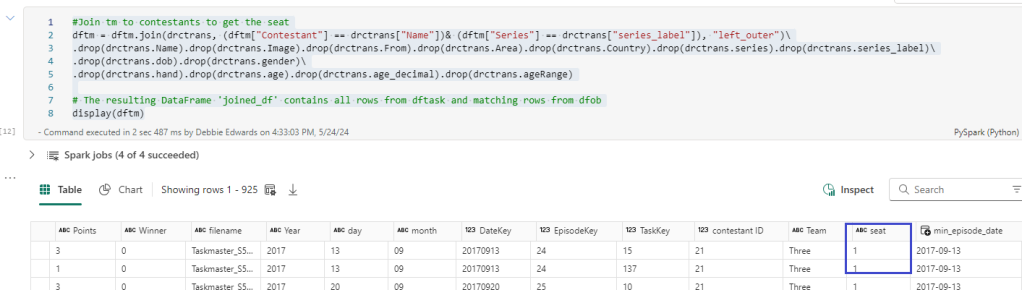

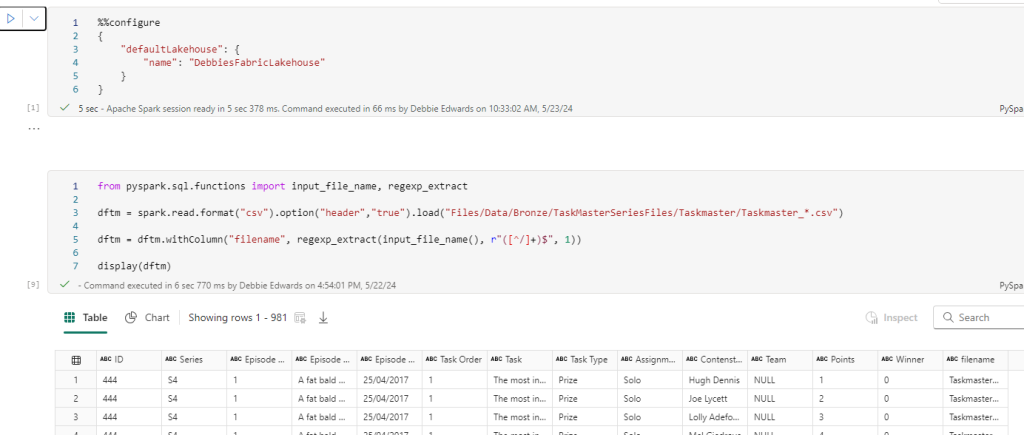

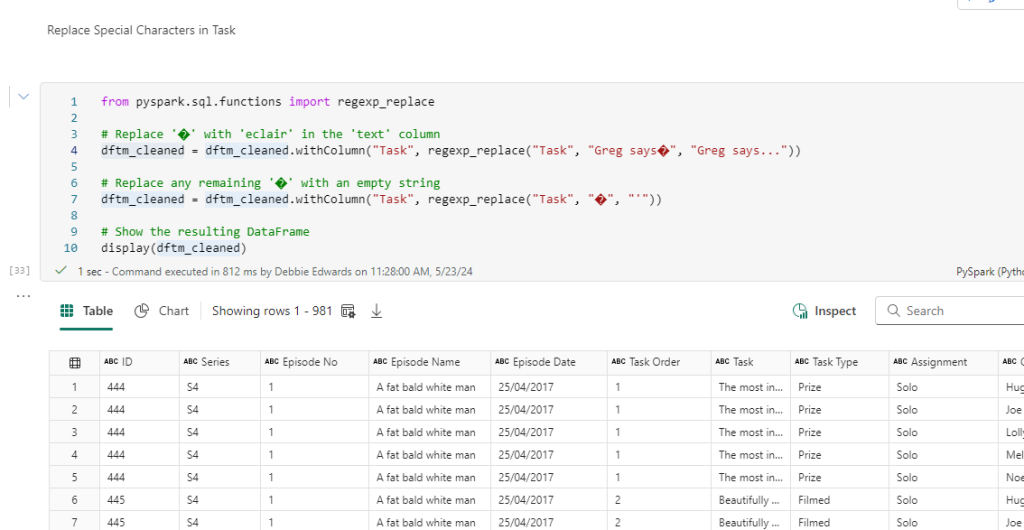

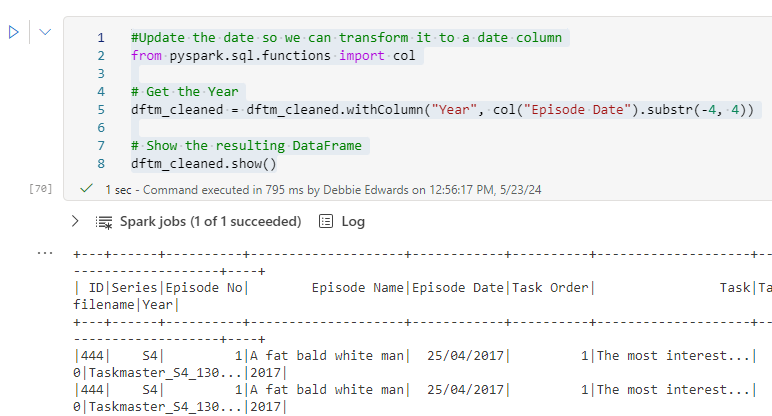

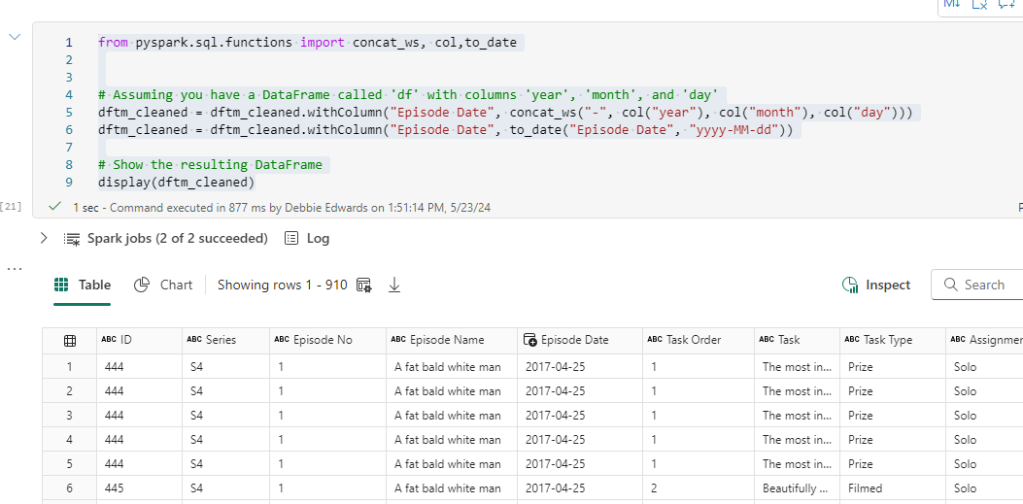

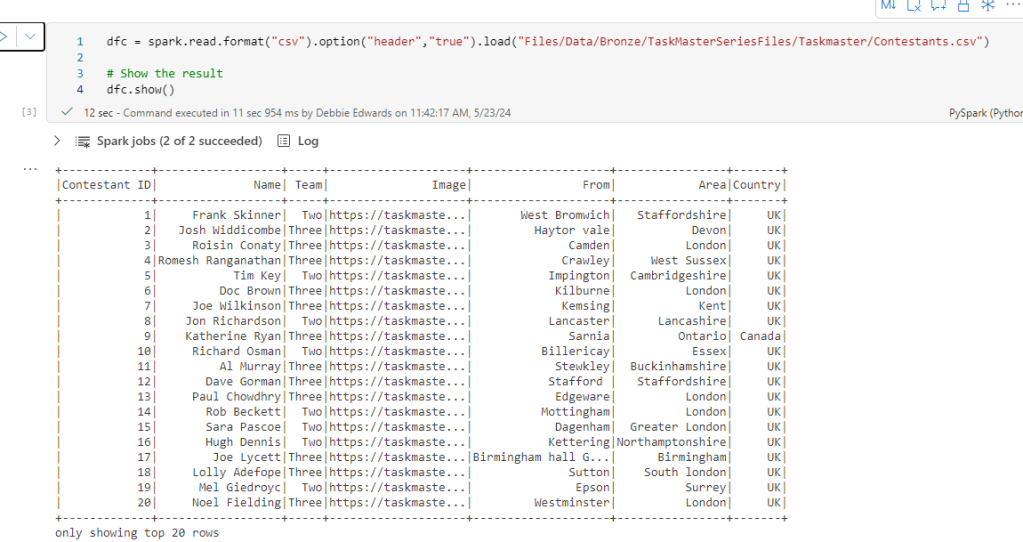

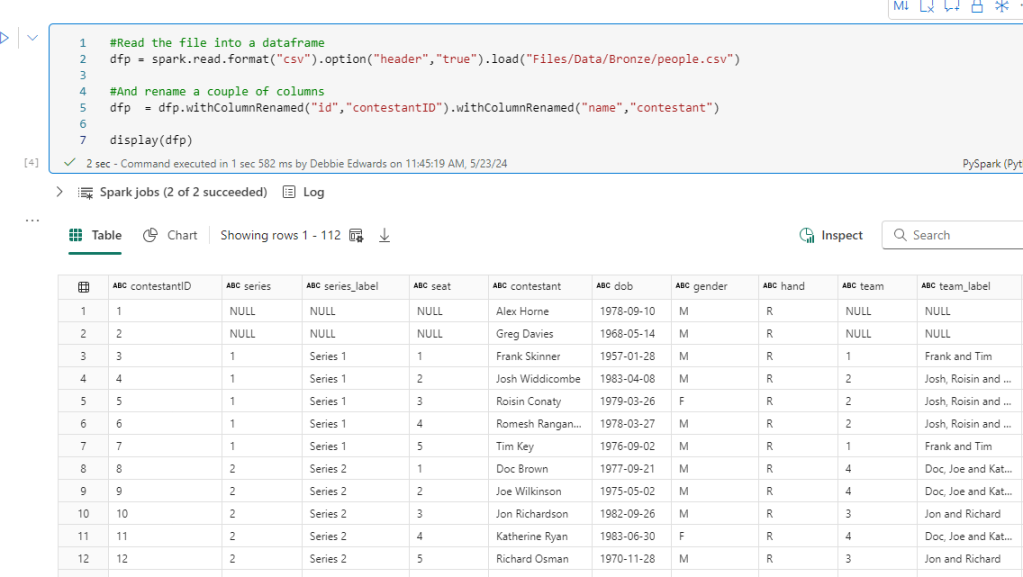

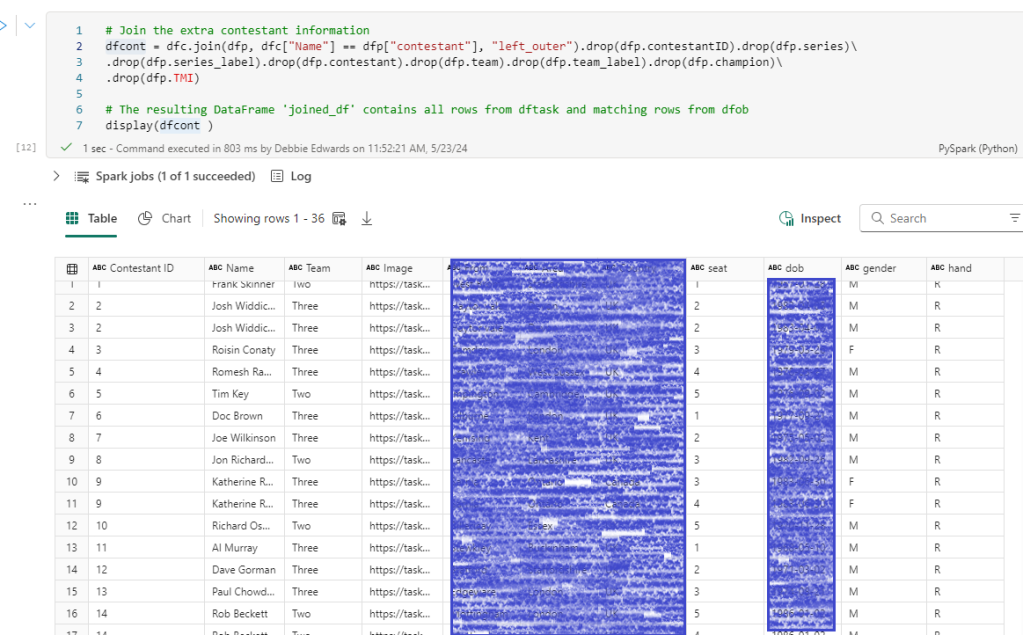

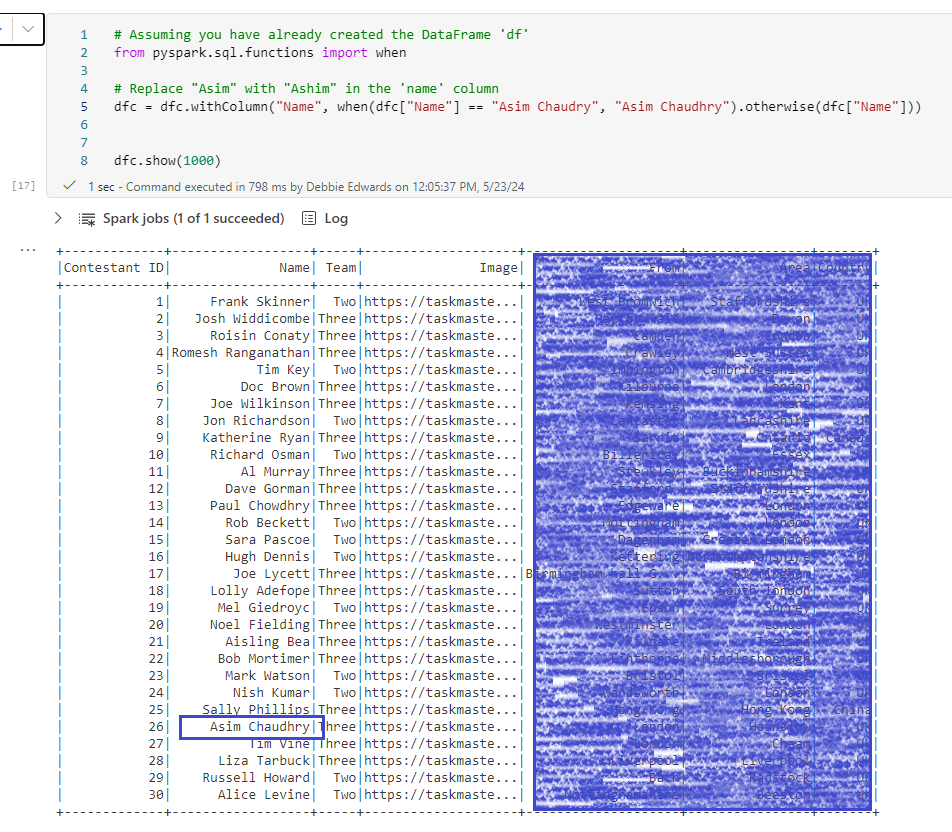

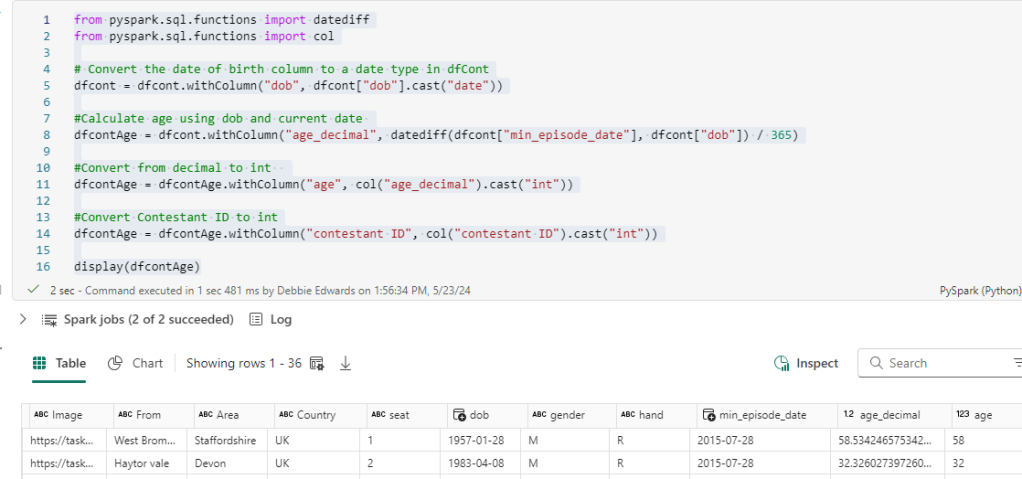

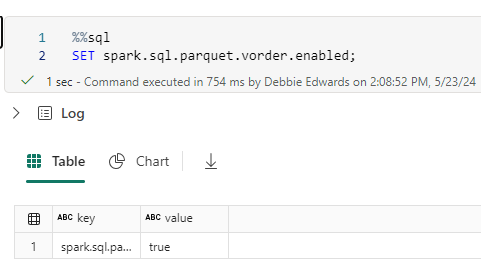

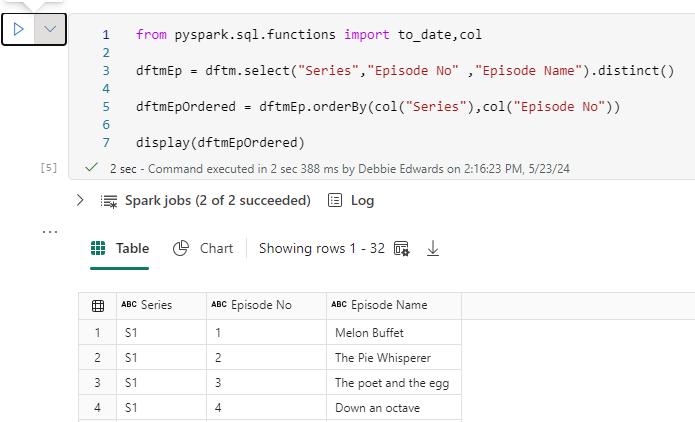

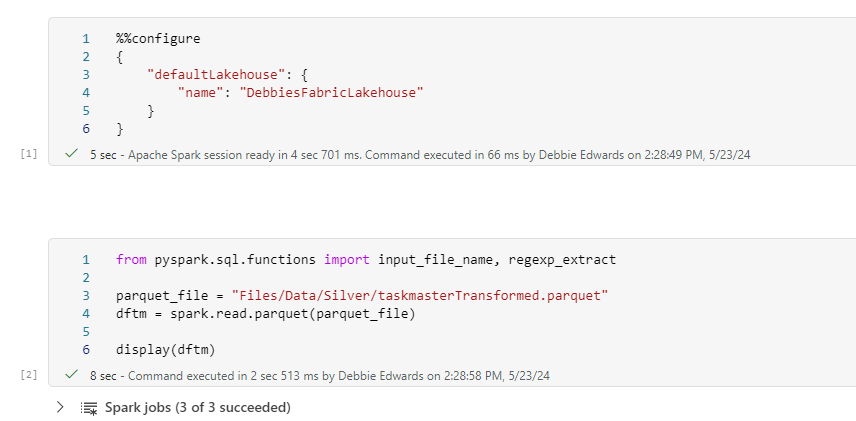

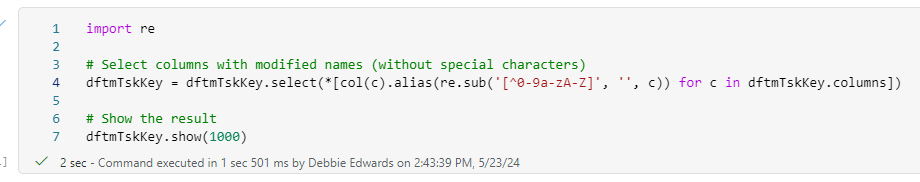

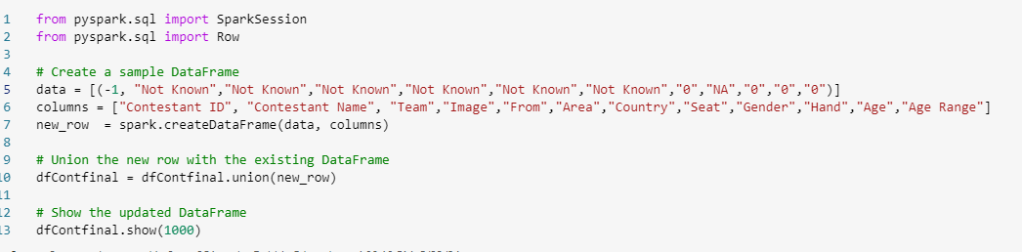

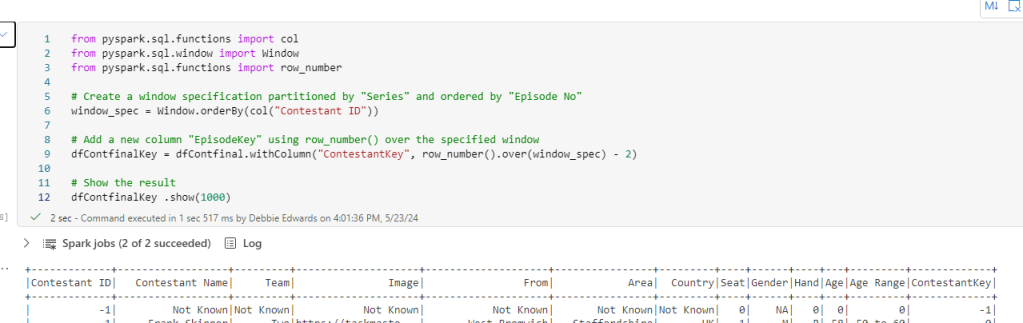

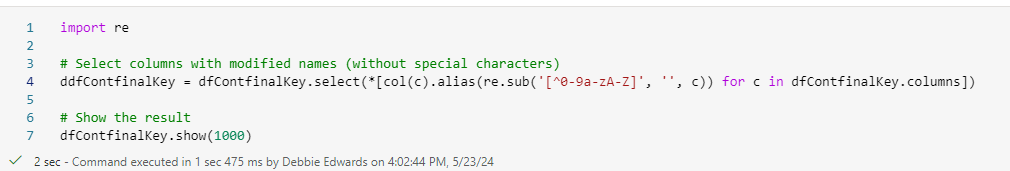

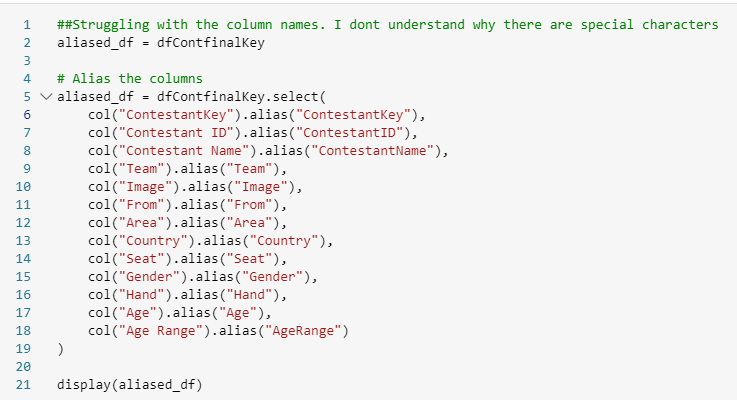

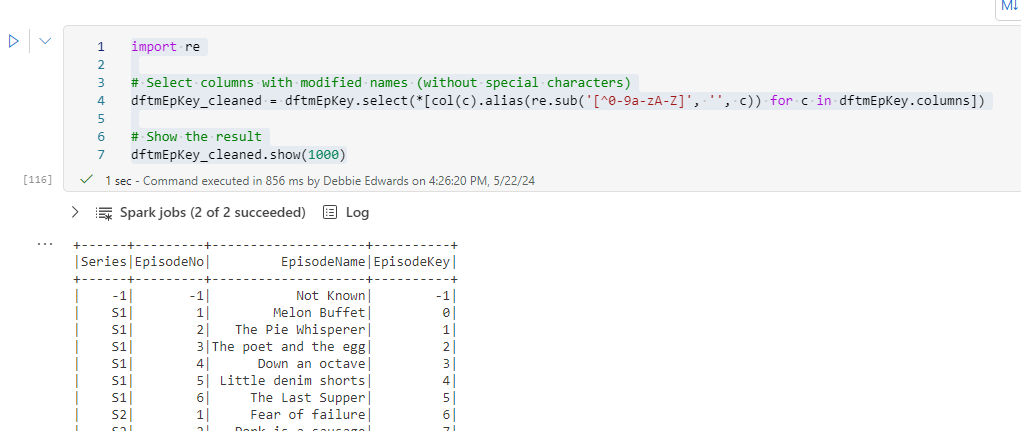

Pyspark and Notebooks

As a SQL developer, I have spent years writing code to create stored procedures to transform data in SQL databases.

SQL, for me is what I think of as my second language. I’m confident with it. Pyspark is fairly new to me. My biggest question was:

Using my SQL knowledge, can I think through a problem and implement that solution with Pyspark.

The answer is, yes.

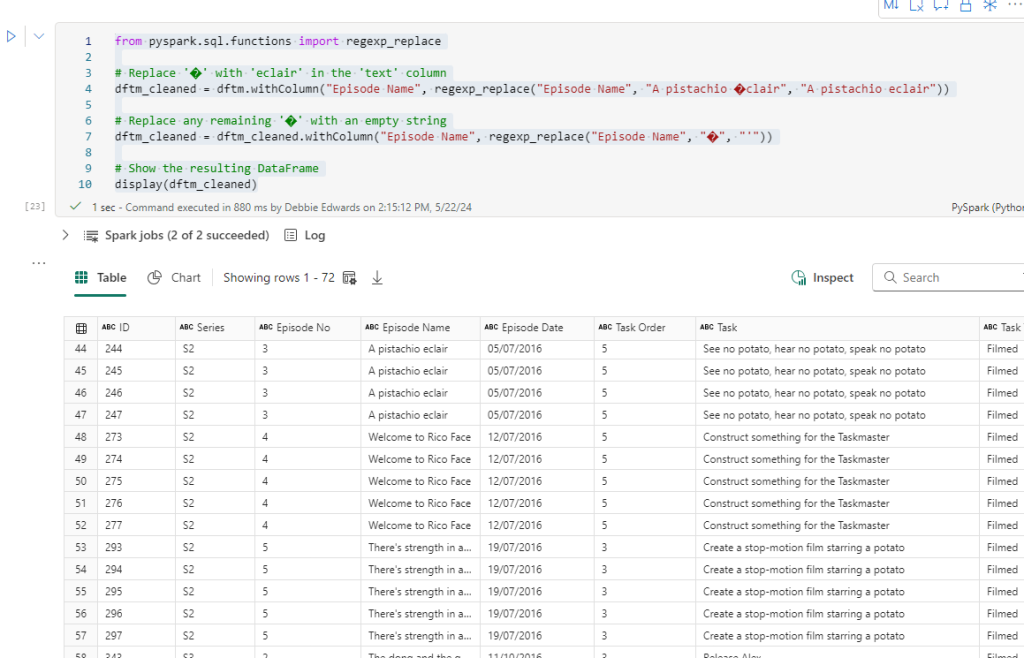

As with anything. Learning new languages can be a steep learning curve. But there is lots of help out there to grips with the new language. For example, CoPilot has been incredibly useful with ideas and code. But, on the whole, you can apply what you know in SQL and use the same solutions in a Pyspark notebook.

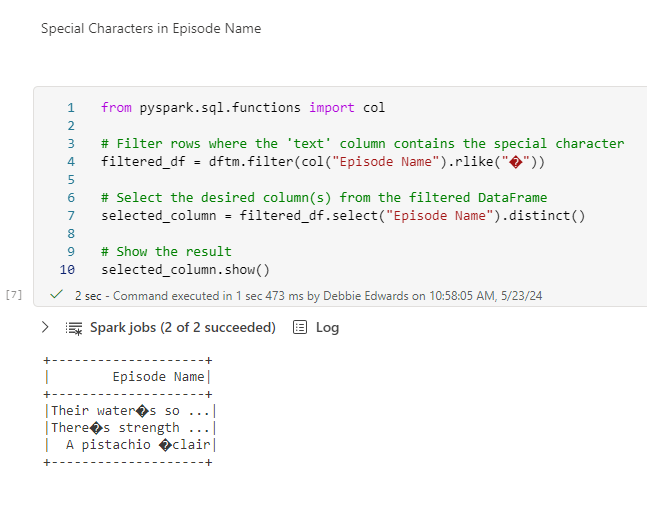

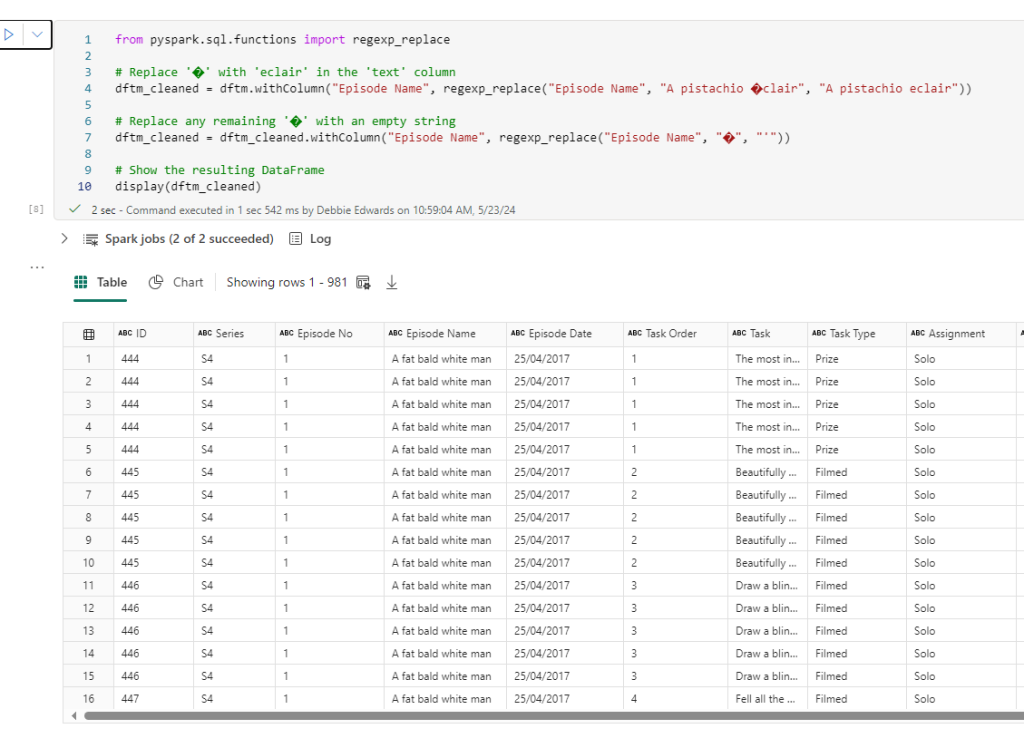

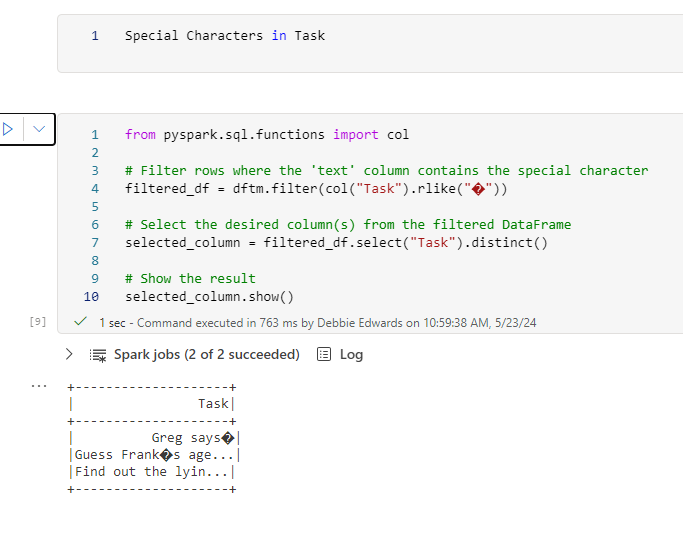

Here are a few Tips

- Pyspark, unlike SQL is CASE sensitive so you have to be much more rigorous when writing code in your notebooks.

- When working with joins in Pyspark. You can significantly speed up the creation of the new data frame by using Broadcast on the smaller table. Broadcast optimizes the performance of your spark job by reducing data shuffling.

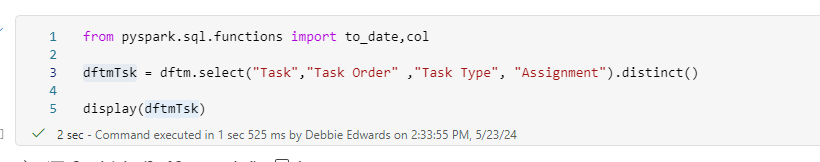

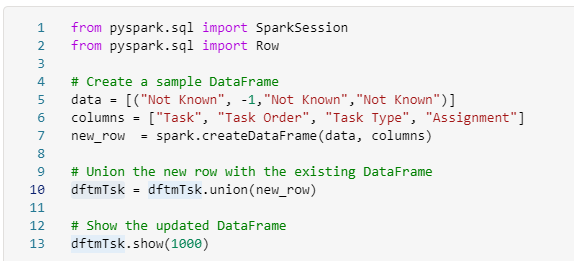

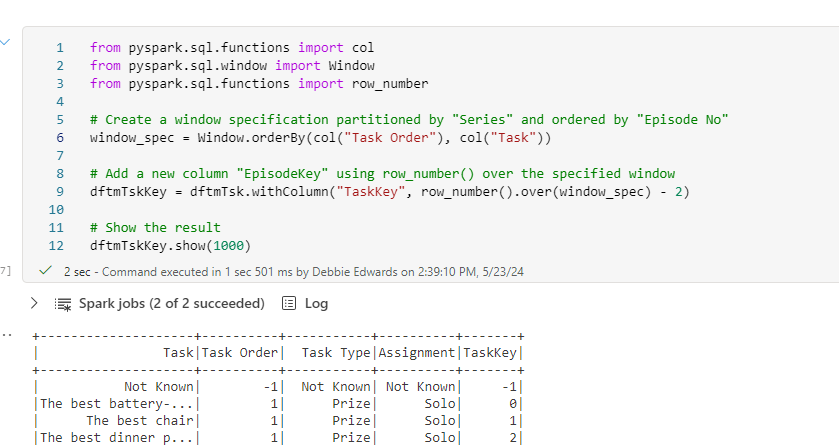

- With SQL, you work with temporary tables and CTE’s (common table expressions). Dataframes replace this functionality, but you can still think of them in terms of your temporary tables.

- SQL, you load the data into tables, With the Lakehouse, you load your data into files. The most common type is Parquet. It’s worth understanding the difference between Parquet and Delta Parquet. We looked at this in detail in the last blog “The Microsoft data Journey so far. From SQL Server, Azure to Fabric”. But we will look at the practicalities of both, a little later.

- Unlike a SQL Stored Procedure where, during development you can leave your development work for a while. Then come back to the same state. The spark session will stop at around 20 minutes so you can’t simply leave it mid notebook. Unless you are happy to run again.

- Start your session in your notebook.

- Click on session status in the bottom left corner

- See the session status in the bottom left corner

Here we can see the timeout period which can be reset.

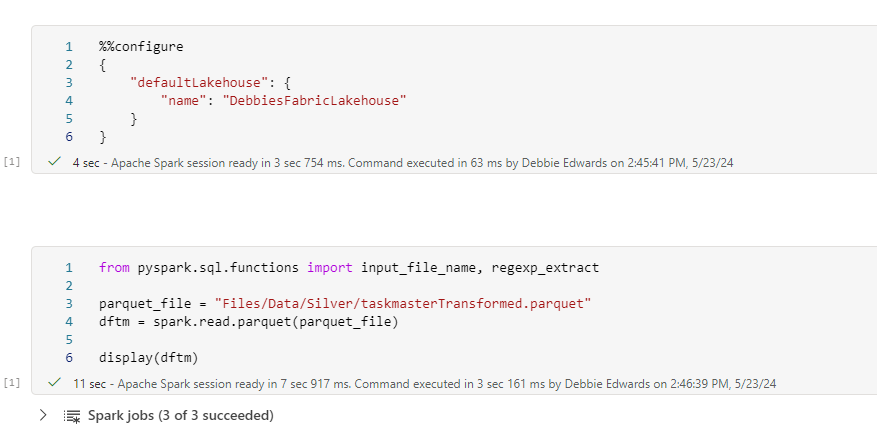

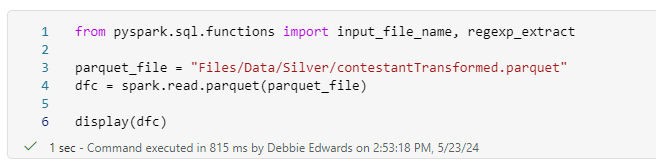

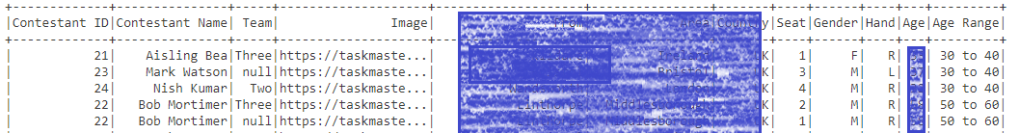

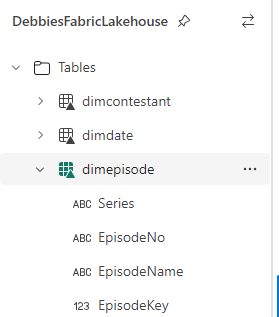

Delta Parquet

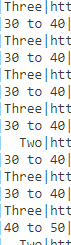

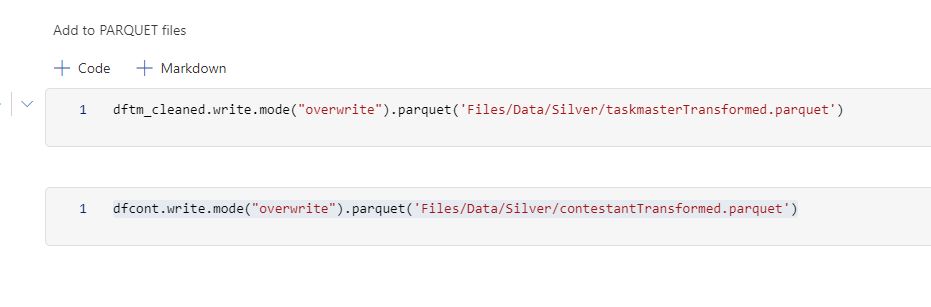

When working with Parquet files. We can either save as Parquet (Saved in the files section of fabric) Or save as Delta Parquet. (Saved in the tables section of Fabric)

Always remember, if you do want to use the SQL Endpoint to run queries over your files, always save as Delta Parquet.

If you want to use the Direct Lake connector to your parquet files for Power BI Semantic Model, again, use Delta Parquet files.

One question was, if you are using a lake house and have the opportunity to create Delta Parquet. Why wouldn’t you save everything with the full Delta components of the parquet file?

There are a few reasons to still go with parquet only.

- Parquet is supported across various platforms. If you share across other systems this may be the best choice.

- Parquet is simple, without the ACID transaction features. This may be sufficient.

- Plain parquet files can offer better performance.

- Parquet files are highly efficient for storage, as they don’t have the delta parquet overheads. A good choice for archiving data.

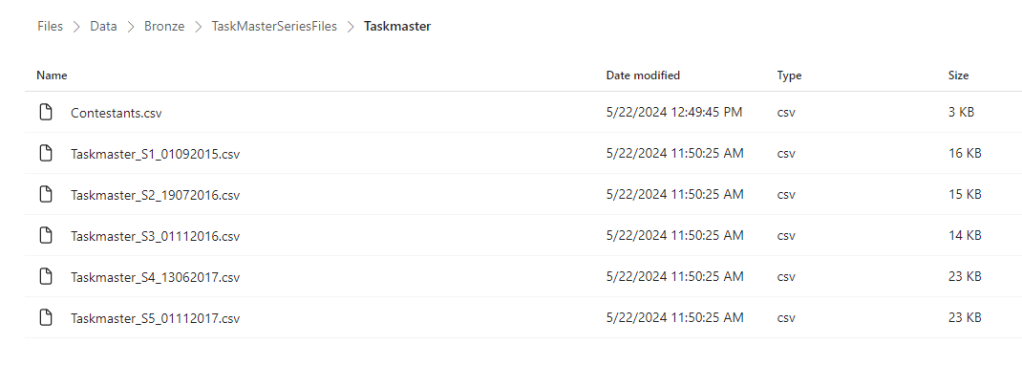

With this in mind. Our project has followed the following logic for file creation

Dims and Facts

Always use Delta Parquet for full ACID functionality and Direct Lake connectivity to Power BI.

Audit Tables

We always keep track of our loads. When the load took place? What file was loaded? How many records? etc. Again, these are held as Delta Parquet. We can use the SQL endpoint if we want to quickly analyse the data. We can also use the Direct Lake connector for Power BI to publish the results to a report.

Even better. Our audit reports contain an issue flag. We create the logic in the Pyspark Notebook to check if there are issues. And if the flag is 1 (Yes) Power BI can immediately notify someone that there may be a problem with the data using Alerts.

Staging tables

A lot of the basic changes are held in transformed tables. We may join lots of tables together. Rename columns. Add calculated columns etc. Before loading to dims and facts. Staging tables are held as Parquet only. Basically, we only use the staging tables to load dim and fact tables. No need for the Delta overheads.

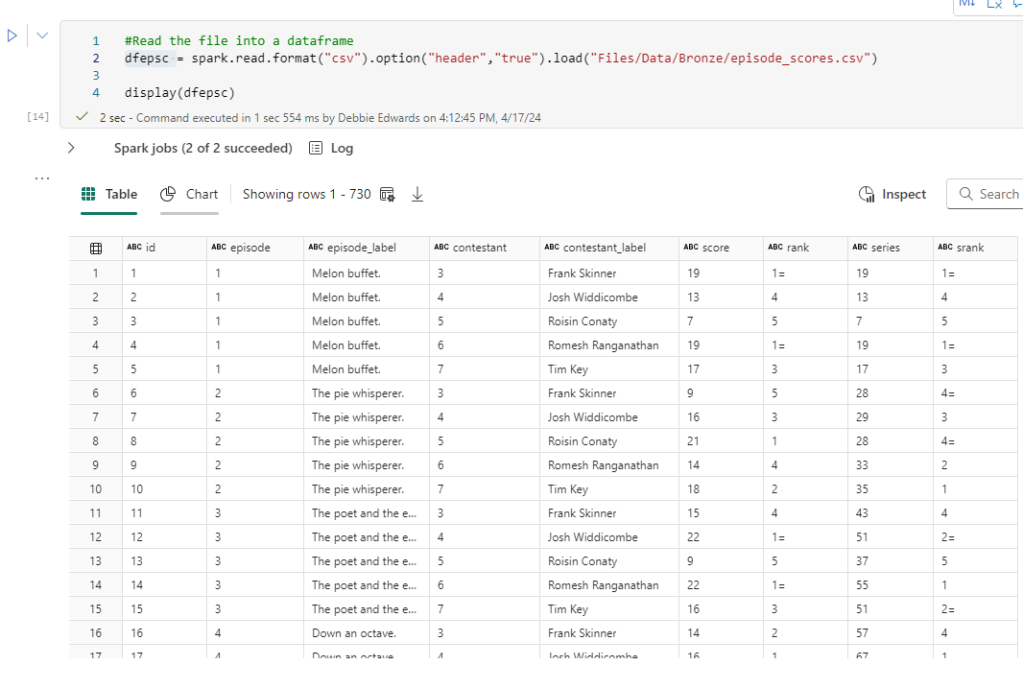

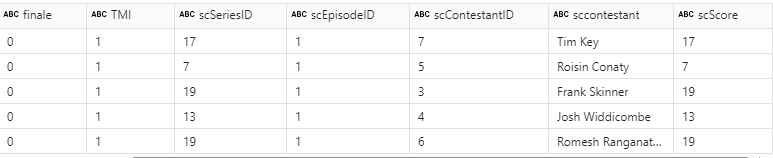

Pipelines

When you create your notebooks, Just like SQL stored Procedures, you need a way of orchestrating their runs. This is where Data Factory came in working with Azure. Now we have Pipelines in Fabric, based on the pipelines from Azure Synapse.

I have used Data factory (and its predecessor Integration Services) for many years and have worked with API’s. The copy activity. Data Mappings etc. What I haven’t used before is the Notebook activity.

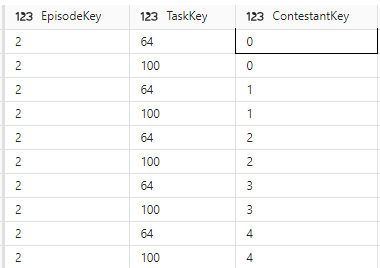

There are 5 notebooks, which need to be run consecutively.

Iterate through Each Notebook

When creating pipelines, The aim is to reuse activities and objects. So, rather than having 5 activities in the pipeline. One for every notebook. We want to use 1 activity that will process all the notebooks.

In the first instance. We aren’t going to add series 5 into the Data Lake.

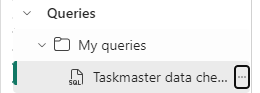

Create a csv file

Also get the IDs of the workspace and the notebooks. These were taken from the Fabric url’s. e.g.

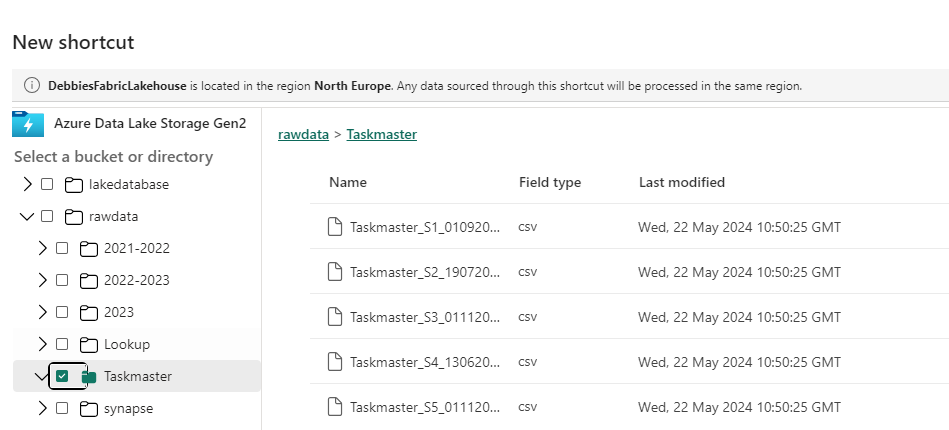

The file is added into the bronze delta lake

Now we should be able to use this information in the pipeline

Create a lookup

In the pipeline we need a Lookup activity to get the information from the JSON file

Add a ForEach Activity

Drag and drop a ForEach activity onto your pipeline canvas and create an On Success Relationship between this and the Lookup.

Sequential is ticked because there are multiple rows for each notebook and we want to move through them sequentially.

Set the ‘Items’ in Settings by clicking to get to the pipeline expression builder

We are using the output.value of our lookup activity.

@activity(‘GetNotebookNames’).output.value

Configure the Notebook Activity Inside ForEach

Inside the Foreach. Add a Notebook activity

Again, click to use the Dynamic content expression builder to build

Workspace ID: @item().workspaceID

Notebook ID: @item().notebookID

Note, when you first set this up you see Workspace and Notebook Name. It changes to ID, I think this is because we are using the item() but it can be confusing.

This is the reason ID has been added into the csv file. But we still wanted the names in the file in order to better understand what is going on for error handling and testing.

- @item(): This refers to the current item in the iteration which is a row. When you’re looping through a collection of items, @item() represents each individual item as the loop progresses.

- .notebookID: This accesses the notebookID property of the current item. And the notebookID is a column in the csv file

Running the pipeline

You can check the inputs and outputs of each activity.

If it fails you can also click on the failure icon.

The above information can help you to create a simple pipeline that iterates through Notebooks.

There are other things to think about:

- What happens if anything fails in the pipeline?

- Can we audit the Pipeline Processes and collect information along the way?

Within the Notebooks, there is Pyspark code that creates auditing Delta Parquet files which contain information like: Date of Load, Number of rows, Name of activity etc. But you can also get Pipeline specific information that can also be recorded.

Currently this Pipeline can be run and it will process either 1 file or multiple files dependant upon what is added to the bronze lakehouse. The Pyspark can deal with either the very first load or subsequent loads.

With this in place, we can move forward to the Semantic Model and Power BI reporting

Conclusion

Most of the time so far has been spent learning how to use Pyspark Code to create Notebooks and our Delta Parquet files. There is so much more to do here, Data Warehousing, Delta parquet file partitioning. Real time data loading, Setting up off line development for code creation etc.

The more you learn, the more questions you have. But for the time being we are going to head off and see what we can do with our transformed data in Power BI.

In the Part 2, we will look at everything related to Power BI in Fabric.