Calculation groups look like a game changer, but are they? These have been available for Analysis Services tabular models for a while. Now Tabular editor Lets you build calculation groups for Power BI.

Taking one of our current pbix files as an example.

There are metrics for number of events, Financial metrics, timing metrics. Each one has to be added to Year to Date, Last month, last Year, Month to date etc metrics.

The same measures are repeated over and over again for the different metrics in the report.

And adding metrics in Power BI desktop isn’t a fast process. Its actually quite fiddly so adding the first lot of DAX required always takes a lot of time.

The Solution

As from July 2020 you can reach tabular editor Via External tools

- You must have them installed at the right version for this to work.

- You must enable Store datasets using enhanced metadata format in Options > Preview features

You are taken straight to the model you are connected to

Right click on Tables. Create New and Calculation Group

Time Intelligence

The best example to look at is time intelligence

This example is renamed to time Intelligence. What has been created is essentially a single column table.

the rows are based on all the calculation items that I already have in this model.

I initially recreate all the metrics from source as SUMs so they can be used within measures. Along with this I may have other base calculations like Distinct Count of IDs to make a number of events. Minimum, Maximum, Average etc.

right click Calculation items and choose new calculation item

In this example, go back to the original pbix and copy a time intelligence DAX measure

YTD Sales = TOTALYTD([Total Sales],DimDate[FullDateAlternateKey])

Total Sales is a measure created with SUM() using the Sales Amount metric from the data source

The measure is replaced with the scalar function SELECTEDMEASURE()

Instead of having to recreate this DAX over all your ID’s where necessary you only need to do it once

Scalar function – a function that returns one single value

The base DAX in this data set can now be added into Tabular Editor

You can imagine starting a prototype model with DAX for a specific metric. Then recreating as calculation groups so you can add all your other metrics without having to copy and paste the same measures over and over again

Tabular Editor currently has no intellisense so you wouldn’t write and test your DAX in here. this would be done in Desktop. If you wrote incorrect DAX in here and Saved to Power BI, you would then see an error icon in Tabular Editor.

Adding more time Intelligence from the original report

The rows are based on all the calculation items that are already have in this example model.

However the DAX needed to be amended because previously the Year to date measure was used to create variance (Two separate measures). We need to be able to give each measure the underlying measure by using variables in order to use them successfully.

So for example

YTD Sales Var % = DIVIDE([YTD Sales Var],[LY YTD Revenue])

Note that YTD Sales var and LY YTD Revenue Measures have already been created over the original year to date measure. We cant have this so we need to move to

YTD Sales Var % = VAR CY = [Total Sales]

VAR TYTD = TOTALYTD([Total Sales],DimDate[FullDateAlternateKey])

VAR LYTD = CALCULATE(

TOTALYTD([Total Sales],DimDate[FullDateAlternateKey])

,SAMEPERIODLASTYEAR(DimDate[FullDateAlternateKey]))

VAR VYTD = TOTALYTD([Total Sales],DimDate[FullDateAlternateKey])-

CALCULATE(

TOTALYTD([Total Sales],DimDate[FullDateAlternateKey]),

SAMEPERIODLASTYEAR(DimDate[FullDateAlternateKey]))

RETURN DIVIDE(VYTD,LYTD)

Within this measure we call the base measure and create the variance% by calling variables.

Now these time Intelligence measures can be created in Tabular Editor

Notice that you cant use another variable within a variable. So our Total year to date has to be re added within the last year to date measure.

If we were doing this without calculation groups in mind we would simply reference the year to date measure we had created

Again, Notice how we cant reuse a variable within a variable. So LTYD needs the full DAX inside the SAMEPERIODLASTYEAR DAX.

And Year to Date variance requires both last year to date and total year to date. this makes your DAX more complex than simply using a measure already set up.

In the above instance I could take out TYTD Variable but honestly. Im leaving it there as a reminder of what the DAX is for Year to Date

As you can see, each measure uses one base measure

And for the percentages I added format String Expression “0.00%”

Save the calculation group into the main desktop file

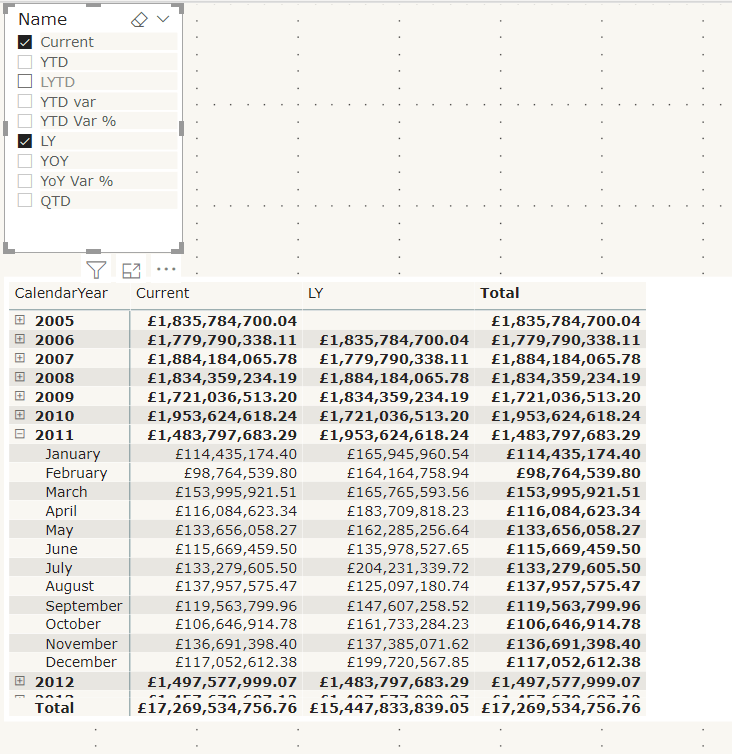

Lets see the calculated measure group in action using a matrix

Name from the time Intelligence group has been added to columns on the matrix.

Year and Month are in Rows

Total Sales, the base measure has been added to values

Name has also been added to a Slicer so you can turn off those you aren’t interested in.

To me, the big win of calculation groups is being able to create one measure and using it across multiple measures instead of creating the same measure over and over again.

And quickly switch to quantity by adding that measure to value

Issues with using calculation groups

- formatting each item is much more difficult in tabular editor because you have to know the code. There is no help for creating these expressions.

- Similarly, you cant develop DAX Here, you need to do it first within power BI Desktop

- Your DAX has to become much more complex, rather than creating a measure and then referencing this measure within another measure. Like Year to Date and Last Year to Date

You cant create the matrix with Months in columns

This is using the measures created in the data set. As you can see, the Months are shown as columns and the measures are also displayed

You cant do this with column groups.

Lets have a look at some visuals already created with time Intelligence to see if we can recreate

Variance Waterfall chart

This is an example of a waterfall variance chart for year to date. If we didn’t have measures outside of a calculation group, could we still pull off the exact same solution?

Table: No you cant recreate the table. You would have to move to a matrix

With a filter on name to the measures you are interested in. It doesn’t appear that you can turn off grand totals though so we have some information there that we don’t need. the Total column.

So, ok but not great

Waterfall visual; there doesn’t appear to be any way you can recreate this. so to create my variance visuals there has to be a measure that can be used.

Conclusion

Calculation groups seem to only for for one visual. the Matrix. Where you can add the base measure to values and the Name to columns.

Even the matrix cant be fully configured to how it would normally be shown with months in columns and the measures underneath.

In order to use time intelligence for all other visuals, there still needs to be measures in your model to use.

It would be a great idea if you could fully get rid of your duplicated measures but you cant.

Because of this, I still don’t have the results I need to spend time creating calculation groups.

Other people seem to be seriously excited about this though so maybe I am missing something. Please let me know if I am, I would be very interested to get another point of view